The Impact of Windows Server 2016 on Data Recovery (Pt. 1)

Microsoft released the final version of Windows Server 2016 a few weeks ago. It comes in three editions: Windows Server 2016 Standard, Windows Server 2016 Datacenter, and Windows Server 2016 Essentials. Two Windows Storage Server 2016 editions, Workgroup and Standard, were released as well; these are available only bundled with manufacturer hardware. Microsoft presents the new OS as the foundation for the future, and many experts say it has several advantages that companies should consider. For example, Windows Server 2016 adds new security layers to the IT infrastructure to better detect and defend against threats. Microsoft also promises more flexibility and stability through virtual environments based on Hyper-V.

With its new network stack, Windows Server 2016 provides fully integrated basic networking functionality as well as the software-defined networking (SDN) architecture of Microsoft Azure. The new server OS is also built on the concept of software-defined storage (SDS), which makes it easy to meet growing storage requirements by adding new storage anywhere in the server infrastructure. Version 2016 offers various tools for dynamically managing compute, networking, storage and security. And last but not least, Windows Server 2016 promises more fault tolerance. In Microsoft’s own words: “When hardware fails, just swap it out; the software heals itself, with no complicated management steps.”

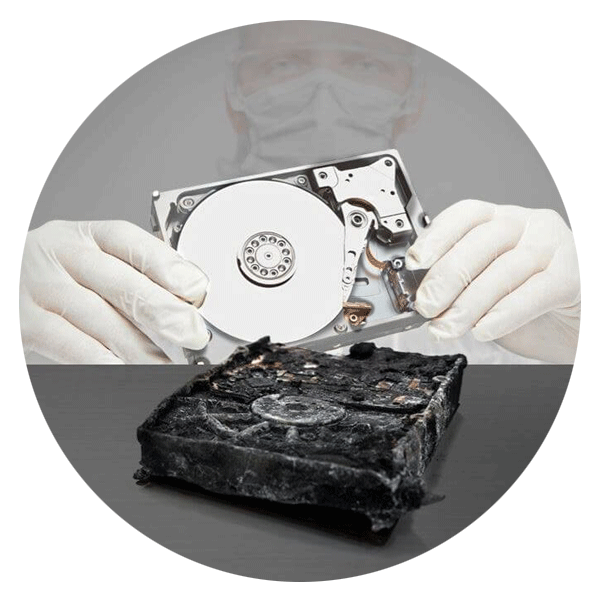

So if the new server OS is this good, why not use it? There are a few points worth considering first. Since we look at every system from a data recovery perspective, we want to focus on two new technologies that could affect both data loss and the chances of a successful recovery: the Resilient File System (ReFS) in its new version 3, and Storage Spaces Direct, the successor to Storage Spaces, which was first introduced in Windows Server 2012. Let’s begin with ReFS.

ReFS (Resilient File System): Improvement or, in the end, a big challenge?

ReFS version 3, the new version of Microsoft’s file system implemented in Windows Server 2016, poses a new challenge for users and data recovery experts alike. Few experts worldwide have the knowledge needed to recover lost data stored on this file system. ReFS is a proprietary technology: Microsoft has not disclosed its technical specifications, so a lot of reverse engineering is needed to analyze the file system and build proper tools to access the data stored on it.

ReFS was introduced to store large amounts of data safely. One thing to remember: it is a file system for storing data, not for running the OS. It is designed for systems with large data sets and offers more efficient scalability and higher availability than NTFS (New Technology File System). Data integrity was one of the main new features, protecting critical data from common errors that can cause data loss. If a system error occurs, ReFS can recover from it without risking data loss and without affecting volume availability. ReFS also addresses media degradation, to prevent data loss as a disk wears out.

One of the main benefits of using Windows Server 2016 with ReFS is that the system automatically creates checksums for the metadata stored on a volume. Any checksum mismatch triggers an automatic repair of the metadata. What makes this feature especially valuable is that user data can be protected against failure in the same way, with checksums of its own: if a checksum does not match, the file is repaired. This feature is called integrity streams, and it can be enabled for a whole volume, for specific folders, or for individual files.
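To make checksum-based detection more concrete, here is a minimal Python sketch. It assumes fixed-size blocks with one checksum each and uses CRC-32 from `zlib` purely as a stand-in; the block size and the names `checksum_blocks` and `find_corrupt_blocks` are hypothetical, and nothing here reflects ReFS's actual on-disk checksum format or algorithm.

```python
import zlib

# Hypothetical block size, chosen for illustration only.
BLOCK_SIZE = 4096


def checksum_blocks(data: bytes) -> list[int]:
    """Return one CRC-32 per BLOCK_SIZE block (a stand-in for ReFS's own checksums)."""
    return [zlib.crc32(data[i:i + BLOCK_SIZE]) for i in range(0, len(data), BLOCK_SIZE)]


def find_corrupt_blocks(data: bytes, stored: list[int]) -> list[int]:
    """Return the indices of blocks whose checksum no longer matches the stored value."""
    current = checksum_blocks(data)
    return [i for i, (now, saved) in enumerate(zip(current, stored)) if now != saved]


original = bytes(range(256)) * 64      # 16 KiB of sample data, i.e. four blocks
stored = checksum_blocks(original)     # checksums recorded at write time

damaged = bytearray(original)
damaged[5000] ^= 0xFF                  # flip one byte inside block 1
print(find_corrupt_blocks(bytes(damaged), stored))  # [1]
```

Detection alone only tells the file system which block is bad; actually repairing it requires a good copy from somewhere else, such as the mirror or parity spaces Microsoft refers to.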

Microsoft claims that “When ReFS is used in conjunction with a mirror space or a parity space, detected corruption—both metadata and user data, when integrity streams are enabled—can be automatically repaired using the alternate copy provided by Storage Spaces.” Microsoft adds, “With ReFS, if corruption occurs, the repair process is both localized to the area of corruption and performed online, requiring no volume downtime. Although rare, if a volume does become corrupted or you choose not to use it with a mirror space or a parity space, ReFS implements salvage, a feature that removes the corrupt data from the namespace on a live volume and ensures that good data is not adversely affected by nonrepairable corrupt data.” What that means is that Microsoft implemented features for self-healing of corrupt data and files.
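The repair-or-salvage behavior Microsoft describes can be modeled in a few lines. The sketch below is a toy, assuming a two-way mirror, one whole-file CRC-32 checksum recorded at write time, and hypothetical names (`MirroredVolume`, `write`, `read`). It illustrates the logic of repairing from an alternate copy and salvaging when no valid copy remains, not how ReFS or Storage Spaces actually implement it.

```python
import zlib
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MirroredVolume:
    """Toy two-way mirror: each file name maps to two copies plus a stored checksum."""
    copies: dict[str, list[bytes]] = field(default_factory=dict)
    checksums: dict[str, int] = field(default_factory=dict)

    def write(self, name: str, data: bytes) -> None:
        self.copies[name] = [data, data]
        self.checksums[name] = zlib.crc32(data)

    def read(self, name: str) -> Optional[bytes]:
        expected = self.checksums[name]
        good = next((c for c in self.copies[name] if zlib.crc32(c) == expected), None)
        if good is None:
            # Salvage: no valid copy left, so drop only this file from the namespace.
            del self.copies[name]
            del self.checksums[name]
            return None
        # Repair: rewrite both copies from the good one; other files are untouched.
        self.copies[name] = [good, good]
        return good


vol = MirroredVolume()
vol.write("a.txt", b"hello")
vol.write("b.txt", b"world")

vol.copies["a.txt"][0] = b"hellX"             # corrupt one mirror copy
print(vol.read("a.txt"))                      # b'hello' (repaired from the other copy)

vol.copies["b.txt"] = [b"worlX", b"worXd"]    # corrupt both copies
print(vol.read("b.txt"))                      # None (salvaged)
print(sorted(vol.copies))                     # ['a.txt']
```

The repair is scoped to the one damaged file, and the salvage path removes only the unrecoverable entry, so `a.txt` stays readable while `b.txt` disappears from the namespace.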

As we pointed out in a previous article on ReFS, its structure works like a database, so recovering data from it is completely different from an NTFS recovery, where the metadata sits in a flat table. To find data, recovery experts have to traverse ReFS like a database, opening tables that in turn contain further tables, and so on. The new maximum file and volume sizes in ReFS can also make data recovery quite challenging. A single file can be as large as 16 exabytes (that’s 16 million terabytes!), and a ReFS volume can be as large as one yottabyte (that’s 1 trillion terabytes!). This enormous storage capacity is also the danger of this technology. Imagine a single corrupted 16-million-terabyte file that you are tasked with recovering. If that one file cannot be recovered, the whole file system, including its volumes, is effectively broken. Clearly, data recovery will become not only difficult but also very, very time-consuming.
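The difference between a flat metadata table and nested tables can be illustrated with a deliberately simplified model. Everything below is hypothetical: the record numbers, table names, dictionary layout and the functions `flat_find` and `nested_find` are invented for illustration and do not reflect the real NTFS MFT or ReFS on-disk structures. The sketch only shows why damage to one intermediate table matters more when data must be reached by traversal.

```python
import copy
from typing import Any, Optional

# Hypothetical NTFS-like layout: one flat table, records keyed by record number.
flat_table: dict[int, dict[str, Any]] = {
    5: {"name": "root", "parent": None},
    64: {"name": "projects", "parent": 5},
    65: {"name": "report.docx", "parent": 64},
}

# Hypothetical ReFS-like layout: tables whose rows are further tables.
nested_tables: dict[str, Any] = {
    "root": {                               # directory table
        "projects": {                       # sub-directory table
            "report.docx": {"size": 4096},  # file table (attributes)
        },
    },
}


def flat_find(table: dict[int, dict[str, Any]], name: str) -> Optional[int]:
    """Scan the flat table row by row; every record is reachable on its own."""
    for number, record in table.items():
        if record["name"] == name:
            return number
    return None


def nested_find(tables: dict[str, Any], path: list[str]) -> Optional[dict[str, Any]]:
    """Open one table per path component, starting from the root table."""
    current: Any = tables["root"]
    for component in path:
        if not isinstance(current, dict) or component not in current:
            return None  # a missing or damaged table hides everything below it
        current = current[component]
    return current


# Damage the "projects" entry in both layouts.
damaged_flat = copy.deepcopy(flat_table)
del damaged_flat[64]
damaged_nested = copy.deepcopy(nested_tables)
damaged_nested["root"]["projects"] = None

print(flat_find(damaged_flat, "report.docx"))                    # 65
print(nested_find(damaged_nested, ["projects", "report.docx"]))  # None
```

In the flat model the file record can still be located by scanning, even though its parent directory record is gone and its full path may be lost. In the nested model a straightforward traversal no longer reaches the file at all, which hints at why recovery tools for such structures may need to search for orphaned tables rather than simply walking down from the root.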

Now that we’ve explained how ReFS affects data structures in Windows Server and data recovery, the second part of this article will focus on the next most important technological development in the new OS: Storage Spaces Direct.
