Block Storage vs. File Storage vs. Object Storage (Pt. 2)

In a previous blog post, we showed the need for a new storage concept to handle the ever-growing amount of data in the Big Data Age. We showed that the traditional storage methods, File Storage and Block Storage, cannot handle these growing volumes of data because of the overhead of the file structure and hierarchy information they add. The solution to this data storage challenge is Object storage. But what about data protection and data recovery? How does this new approach guard against data loss? Find out now:

Erasure coding as a security measure against data loss in object-based storage systems

For a long time, RAID was a good way to protect against data loss caused by a hardware failure such as a broken hard disk, or even two broken disks with RAID 6. Calculating parity and recovering data from it works well with the smaller disk sizes that were common in recent years. With disk sizes approaching 10 terabytes or more, however, recovering a full disk might take months in the future. Predicting the exact recovery time in advance is also difficult, because it depends on the system's current workload. This is why RAID is not used for data protection or data recovery in Object storage systems: it would be impossible to restore data within a manageable time frame. There is another risk as well: if more disks fail during a RAID rebuild than the RAID level can tolerate, the data is lost for good with no chance of recovery. That is why Erasure Coding was introduced for huge storage systems and their disks.
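To make the parity idea concrete, here is a minimal Python sketch of single-parity (RAID 5 style) recovery, plus a back-of-the-envelope rebuild-time estimate. The function names and the 100 MB/s rebuild rate in the usage example are illustrative assumptions, not figures from any particular RAID controller; a rebuild that competes with production workload will usually run slower than this lower bound.

```python
from functools import reduce


def xor_parity(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks, i.e. a single parity block as in RAID 5."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving: list[bytes], parity: bytes) -> bytes:
    """Recover one lost data block: XOR the parity with every surviving data block."""
    return xor_parity(surviving + [parity])


def rebuild_hours(disk_tb: float, rebuild_mb_per_s: float) -> float:
    """Lower bound on rebuild time: capacity divided by the sustained rebuild rate.

    Uses decimal units (1 TB = 1,000,000 MB) and ignores competing workload.
    """
    return disk_tb * 1_000_000 / rebuild_mb_per_s / 3600


data_blocks = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_parity(data_blocks)
# Pretend the disk holding the second block has failed.
recovered = rebuild_missing([data_blocks[0], data_blocks[2]], parity)
print(recovered)  # b'BBBB'
print(f"{rebuild_hours(10, 100):.1f} hours")  # 27.8 hours at a hypothetical 100 MB/s
```

Single parity can repair only one lost block per stripe; RAID 6 adds a second, independently computed parity so that two failures can be tolerated. The estimate also shows why rebuild time grows in direct proportion to disk capacity.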

As pointed out before, objects can be located anywhere in a working Object storage system: on premises, at many different sites, or in the Cloud. Thanks to their Internet-compatible structure, objects can be accessed from any device with Internet access. This is where Erasure Coding comes in to protect objects against data loss:

Erasure coding is similar to a classical RAID recovery. Here too, additional information is generated from the objects to be stored. In a system with Erasure Coding (EC), the objects are split into parts. These data blocks are typically several megabytes in size and therefore much bigger than the blocks normally created in a RAID-protected system.
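The splitting step itself is simple. The sketch below assumes a hypothetical block size of 4 MiB; real object storage systems choose their own block sizes, and the function name is made up for illustration.

```python
BLOCK_SIZE = 4 * 1024 * 1024  # hypothetical 4 MiB block size


def split_object(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Split an object into consecutive blocks; the last block may be shorter."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


obj = bytes(10 * 1024 * 1024)  # a 10 MiB dummy object
blocks = split_object(obj)
print([len(b) // (1024 * 1024) for b in blocks])  # [4, 4, 2]
```

Each of these blocks would then be erasure-coded on its own, so a large object never has to be protected or rebuilt as one monolithic unit.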

Each data block is then analyzed, and in addition to the original data block, several smaller fragments are created. Only a minimum number of these so-called shards (block parts) is needed to recover the original data. For example, an EC scheme might create 16 fragments from a data block, of which any 12 (regardless of which ones are still available) are enough to rebuild the original information.
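The "any 12 of 16" property can be illustrated with a Reed-Solomon-style code built from polynomial interpolation. This is a swapped-in technique for illustration, not the scheme any specific product uses: the sketch works over the prime field of integers modulo 65537 instead of the binary fields and XOR-based arithmetic that production erasure codes use, and it is far slower. Each stripe of k data bytes defines a polynomial of degree below k; fragment i stores that polynomial's value at x = i + 1, so the first k fragments hold the data itself and any k fragments determine the polynomial again.

```python
P = 65537  # prime larger than 255, so every byte value is a field element


def _interpolate_at(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through points (Lagrange form, mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, P - 2, P) is the modular inverse of den (Fermat's little theorem).
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def encode(data: bytes, k: int, n: int) -> list[list[int]]:
    """Turn data into n fragments; any k of them suffice to rebuild it."""
    padded = data + bytes(-len(data) % k)  # zero-pad to a whole number of stripes
    fragments: list[list[int]] = [[] for _ in range(n)]
    for s in range(0, len(padded), k):
        stripe = padded[s:s + k]
        points = [(x + 1, stripe[x]) for x in range(k)]
        for i in range(n):
            # Fragments 0..k-1 are the data bytes themselves; the rest are extra evaluations.
            fragments[i].append(stripe[i] if i < k else _interpolate_at(points, i + 1))
    return fragments


def decode(available: dict[int, list[int]], k: int, length: int) -> bytes:
    """Rebuild the original bytes from any k fragments, given as {fragment index: symbols}."""
    if len(available) < k:
        raise ValueError(f"need at least {k} fragments, got {len(available)}")
    chosen = sorted(available.items())[:k]
    out = bytearray()
    for s in range(len(chosen[0][1])):
        points = [(i + 1, frag[s]) for i, frag in chosen]
        out.extend(_interpolate_at(points, x + 1) for x in range(k))
    return bytes(out[:length])


message = b"Any 12 of these 16 fragments are enough."
frags = encode(message, k=12, n=16)
lost = {0, 3, 7, 15}  # four fragments are gone, e.g. on failed disks
survivors = {i: f for i, f in enumerate(frags) if i not in lost}
print(decode(survivors, k=12, length=len(message)))
```

In this sketch the extra fragments can hold values up to 65536, so they need slightly more than one byte per symbol; practical codes avoid this by computing in GF(2^8), where every symbol stays a single byte.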

To create these fragments, a special mathematical procedure is used: the XOR-Scheduling Algorithm, which speeds up encoding by reducing the number of XOR operations needed to compute the fragments. For more information, check out the original paper on it: http://nisl.wayne.edu/Papers/Tech/dsn-2009.pdf .

Splitting data and objects into fragments has several advantages: as long as the fragments are saved on different disks or at different locations, a drive failure does not lead to data loss. Even better, since only the required minimum number of fragments, rather than all of the original data, is needed for a rebuild, the whole recovery process is much faster than its RAID counterpart. Additionally, it is much easier to store fragments at another storage location than to store a full backup on an external storage system. The administrator only has to make sure that enough fragments are available when they are needed.
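Making sure that "enough fragments are available" boils down to simple counting. The sketch below assumes a hypothetical layout for the 16-fragment, 12-required example, spread evenly over four sites; the site names, layout, and function names are made up for illustration.

```python
def surviving_fragments(placement: dict[int, str], failed_sites: set[str]) -> int:
    """Count fragments stored at sites that are still reachable."""
    return sum(1 for site in placement.values() if site not in failed_sites)


def is_recoverable(placement: dict[int, str], failed_sites: set[str], k: int) -> bool:
    """The data survives as long as at least k fragments are still reachable."""
    return surviving_fragments(placement, failed_sites) >= k


k, n = 12, 16
# Hypothetical layout: spread 16 fragments round-robin over 4 sites (4 per site).
placement = {i: f"site-{i % 4}" for i in range(n)}

print(is_recoverable(placement, {"site-0"}, k))            # True: 12 fragments left
print(is_recoverable(placement, {"site-0", "site-1"}, k))  # False: only 8 left
print(f"storage overhead: {n / k:.2f}x")                   # 1.33x
```

Under these assumptions, losing a whole site is survivable, and the raw capacity needed is n/k = 16/12, about 1.33 times the data size, compared with twice the data size for keeping one complete extra copy.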

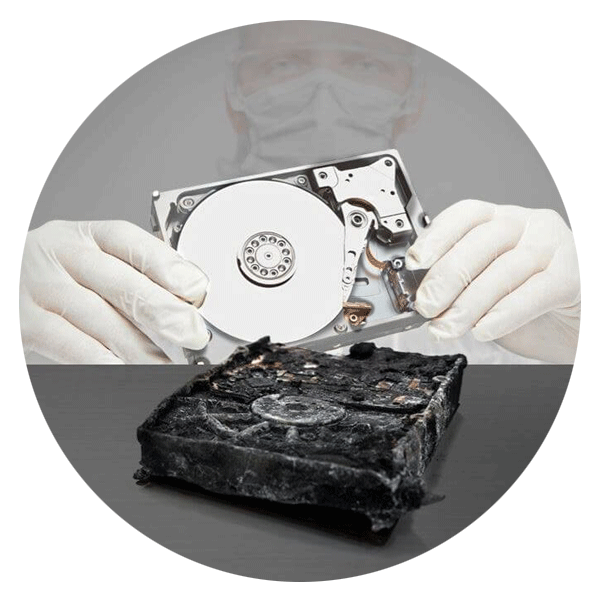

Can data recovery engineers recover data from object storage systems?

Because these systems are highly resilient against failures, none of these new object storage systems has so far found its way into any of Ontrack's many data recovery labs around the world.

However, Ontrack data recovery engineers have already successfully recovered data from a beta version of an EMC Isilon storage system designed specifically to hold vast amounts of big data. Simply put, an Isilon system stores all data in a so-called "data lake" that spreads across many hard disk drives. EMC's idea of storing data in a big data lake is comparable to the concept of object storage. In addition, the EMC system uses a special file system (OneFS) that is designed specifically for big data and the data lake concept.

In a data loss case involving a beta version of such a system, a kernel panic occurred and several hard disks showed failures. Even though EMC was able to recover most of the data itself with the normal built-in recovery tools, a consistency check showed that several disks were faulty, so not all of the data could be rescued this way.

To recover the data, Ontrack engineers from the R&D department developed special tools to quickly analyze an existing OneFS drive and find missing or corrupt data structures. Once the engineers understood the original structure of the system, they were able to recover almost all of the 4 million files that had gone missing.

This example shows that modern data recovery is, in principle, capable of recovering lost data from an object storage system in the highly unlikely case that the built-in data recovery tools and safety features fail.

However, as the case shows, it takes a huge effort to dig deep into the system's structure, analyze and unpack several layers of data, and piece together parts stored on different disks before the original data can finally be accessed.

There are other challenges in an object storage system that need to be addressed as well: since the addresses of the data in an object storage system are managed by the application itself, each such case requires a purpose-built solution. This solution helps the engineers find out where consistent data blocks are stored on the hundreds of hard disks, and which missing data blocks have to be recovered, for example via Erasure Coding or special data recovery techniques.
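When the application is the only place that knows where each fragment lives, recovering data first means reconstructing that mapping. The sketch below assumes a hypothetical placement scheme, rendezvous (highest-random-weight) hashing of the object ID and disk name; real object storage systems use their own, often proprietary, metadata, and all names here are made up for illustration. It shows why the mapping matters: once it is known, engineers can list exactly which fragments sat on failed disks and whether enough intact fragments remain for an Erasure Coding rebuild.

```python
import hashlib


def place_fragments(object_id: str, n: int, disks: list[str]) -> dict[int, str]:
    """Hypothetical placement via rendezvous hashing: rank disks by hash(object_id, disk), take the top n."""
    if n > len(disks):
        raise ValueError("not enough disks for one fragment per disk")
    ranked = sorted(
        disks,
        key=lambda disk: hashlib.sha256(f"{object_id}:{disk}".encode()).digest(),
        reverse=True,
    )
    return {index: ranked[index] for index in range(n)}


def recovery_plan(placement: dict[int, str], failed_disks: set[str], k: int) -> tuple[list[int], bool]:
    """Return the fragment indices lost with the failed disks and whether k intact fragments remain."""
    missing = sorted(i for i, disk in placement.items() if disk in failed_disks)
    return missing, len(placement) - len(missing) >= k


disks = [f"disk-{i:03d}" for i in range(200)]
placement = place_fragments("customer-archive/2016/report.pdf", n=16, disks=disks)
failed = {placement[2], placement[9]}
missing, rebuildable = recovery_plan(placement, failed, k=12)
print(missing, rebuildable)  # [2, 9] True
```

Because the placement is derived deterministically from the object ID, the same mapping can be recomputed later without a central lookup table. Without knowledge of such a rule, engineers have to rediscover it from the raw disks, which is exactly the effort described above.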
