30 years and counting - will RAID systems ever get old?

It is rare in the IT business for a technology developed decades ago to remain widely used and important to administrators and other users. Yet even modern servers and storage systems run RAID technology inside, mostly in enterprises but increasingly in consumer NAS systems as well.

Three decades is a very long time in information technology: long enough for many concepts and products to be developed, brought to market and disappear again. RAID has survived and is now more than 30 years old. So why is it still such an important concept?

The origin of RAID systems

David Patterson, Garth A. Gibson and Randy Katz of the University of California, Berkeley coined the term RAID in 1987 and presented their paper “A Case for Redundant Arrays of Inexpensive Disks (RAID)” at the SIGMOD conference in June 1988. At the time, hard drives were still quite expensive, and keeping data storage systems ‘lean’ was not only common but a necessity.

Moreover, this was still the era of huge mainframe computers in companies; desktop computers had not yet been widely introduced into the workplace. That was starting to change, however, as personal computers grew in sales and usage. As a consequence, hard disk drives for these first non-mainframe computers were already much cheaper than the drives used in the far bigger mainframes of the time.

This is why the concept of RAID was developed: Patterson, Gibson and Katz argued that several connected, cheaper (PC) hard drives could beat a single mainframe hard drive in performance. Using many drives at once raises the chance that one of them fails, but the drives can be configured for redundancy so that the reliability of the array far exceeds that of any single large mainframe drive. RAID is therefore the exact opposite of the then-common SLED (Single Large Expensive Disk) used in mainframe computers.
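To put the reliability side of this argument into numbers, here is a minimal Python sketch. It assumes that disks fail independently and at a constant rate, in which case the mean time to failure (MTTF) of an array without any redundancy is simply the single-disk MTTF divided by the number of disks. The function name and the 30,000-hour disk MTTF are illustrative assumptions, not figures for a specific product.

```python
def array_mttf_hours(disk_mttf_hours: float, num_disks: int) -> float:
    """Mean time to failure of an array with no redundancy.

    Assumes independent disks with constant (exponential) failure rates:
    the individual failure rates add up, and the first disk failure
    loses data, so the array MTTF is the disk MTTF divided by N.
    """
    if num_disks < 1:
        raise ValueError("need at least one disk")
    return disk_mttf_hours / num_disks


if __name__ == "__main__":
    disk_mttf = 30_000.0  # hypothetical MTTF of one inexpensive disk, in hours
    for n in (1, 10, 100):
        hours = array_mttf_hours(disk_mttf, n)
        print(f"{n:>3} disks: {hours:>8,.0f} hours ({hours / 24:,.1f} days)")
```

Under these assumptions, 100 such disks would lose data on average every 300 hours (12.5 days), which is why redundancy has to be added before a large array of cheap disks can be trusted.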

RAID explained

To keep it simple: RAID is based on the concept of spreading or replicating data across multiple inexpensive (or independent) drives. Drives in the array are configured so that data is divided or replicated over two or more drives, either to distribute the load or to allow data to be recovered if a drive fails. There are two ways to implement this: in hardware, with a dedicated RAID controller, or in software, which most modern operating systems already include.

Hardware-based systems manage the RAID independently of the host computer using a RAID controller, so the operating system is unaware of how the RAID works internally and sees the whole storage system as a single volume connected to the host.

Beyond these technical implementations, the RAID concept is based on a few fundamental principles:

- Parity is extra information calculated from the data (typically with an XOR operation) and stored across the RAID system, which allows the data on a failed drive to be reconstructed.

- Redundancy is the duplication of critical components in the system architecture to increase reliability and act as a fail-safe. In essence, it allows one or more components to fail before the whole system fails; in the case of RAID systems, the components are the drives.

- Mirroring means the same data is duplicated from one drive to another.

- Striping means data is split into blocks that are written across multiple disks.

Different RAID setups use one or more of these techniques, depending on system requirements.
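The parity principle can be illustrated with XOR, the operation RAID 5 typically uses for its single parity block. The following minimal Python sketch computes a parity block for one stripe and then rebuilds a missing block from the survivors. The function names are hypothetical, and plain `bytes` objects stand in for disk blocks.

```python
def xor_blocks(blocks: list[bytes]) -> bytes:
    """Return the byte-wise XOR of equally sized blocks."""
    length = len(blocks[0])
    if any(len(block) != length for block in blocks):
        raise ValueError("all blocks must have the same length")
    result = bytearray(length)
    for block in blocks:
        for i, byte in enumerate(block):
            result[i] ^= byte
    return bytes(result)


def compute_parity(data_blocks: list[bytes]) -> bytes:
    """The parity block is the XOR of all data blocks in a stripe."""
    return xor_blocks(data_blocks)


def recover_block(surviving_blocks: list[bytes], parity: bytes) -> bytes:
    """Rebuild the one missing data block of a stripe.

    XOR-ing the parity with every surviving block cancels them out
    (x ^ x == 0), leaving exactly the block that was lost.
    """
    return xor_blocks(surviving_blocks + [parity])


if __name__ == "__main__":
    stripe = [b"RAID", b"is30", b"yrs!"]  # three data blocks on three drives
    parity = compute_parity(stripe)       # stored on a fourth drive
    lost = stripe.pop(1)                  # simulate the failure of drive 2
    print(recover_block(stripe, parity) == lost)  # True
```

XOR parity can only rebuild one missing block per stripe; tolerating two failures, as RAID 6 does, needs a second, independently calculated parity.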

The standard RAID levels are built on these principles:

RAID 0 - Uses ‘striping’ and is the most basic RAID level. It offers no redundancy but does increase performance. Data is striped across at least two disks, and every disk added increases read/write performance and storage capacity compared with a single drive. If one drive fails, the RAID controller has no way to rebuild its data, so the contents of the whole array are lost.
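How striping distributes data can be shown as an address calculation. This minimal Python sketch assumes a simple round-robin layout in which data is cut into fixed-size chunks that are dealt out to the disks one after another; the function name, the chunk size and the disk count are hypothetical examples, and real controllers may differ in detail.

```python
def raid0_locate(logical_block: int, num_disks: int, chunk_blocks: int) -> tuple[int, int]:
    """Map a logical block number to (disk index, block offset on that disk).

    Data is cut into chunks of `chunk_blocks` blocks, and the chunks are
    dealt out to the disks round-robin, one chunk per disk per stripe.
    """
    if num_disks < 2:
        raise ValueError("RAID 0 needs at least two disks")
    if chunk_blocks < 1 or logical_block < 0:
        raise ValueError("invalid chunk size or block number")
    chunk, within = divmod(logical_block, chunk_blocks)  # which chunk, and where in it
    stripe, disk = divmod(chunk, num_disks)              # which row, and which disk
    return disk, stripe * chunk_blocks + within


if __name__ == "__main__":
    # Hypothetical layout: 3 disks, 4 blocks per chunk.
    for block in range(0, 16, 3):
        disk, offset = raid0_locate(block, num_disks=3, chunk_blocks=4)
        print(f"logical block {block:>2} -> disk {disk}, offset {offset}")
```

Consecutive chunks land on different disks, so a large sequential read or write keeps all drives busy at once. The same layout explains the risk: with no copy and no parity, losing one disk leaves holes throughout the array.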

RAID 1 - Uses ‘mirroring’, which, as the name suggests, writes the same data to two disks; it is the simplest RAID level that provides redundancy. RAID 1 can roughly double read performance compared with a single drive, but it gives no increase in write speed. This level allows one drive to fail.
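The behaviour described for RAID 1 can be modelled with a toy mirror in Python. This is a sketch under simplifying assumptions: each "disk" is a dictionary of blocks, reads alternate between healthy disks, and there is no resynchronisation when a disk is replaced. The class and method names are hypothetical.

```python
import itertools


class Raid1:
    """Toy n-way mirror: each 'disk' is a dict mapping block numbers to data."""

    def __init__(self, num_disks: int = 2) -> None:
        if num_disks < 2:
            raise ValueError("mirroring needs at least two disks")
        self.disks: list[dict[int, bytes]] = [{} for _ in range(num_disks)]
        self.failed: set[int] = set()
        self._turn = itertools.cycle(range(num_disks))  # round-robin read scheduler

    def write(self, block: int, data: bytes) -> None:
        # Every healthy disk must receive the write, so writes are no faster than one drive.
        for index, disk in enumerate(self.disks):
            if index not in self.failed:
                disk[block] = data

    def read(self, block: int) -> bytes:
        # Any healthy copy can serve a read; alternating disks spreads the read load.
        for _ in range(len(self.disks)):
            index = next(self._turn)
            if index not in self.failed:
                return self.disks[index][block]
        raise OSError("all mirrors have failed")

    def fail(self, index: int) -> None:
        self.failed.add(index)


if __name__ == "__main__":
    mirror = Raid1()
    mirror.write(0, b"payroll")
    mirror.fail(0)          # one drive dies...
    print(mirror.read(0))   # ...but the copy on the other drive is still readable
```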

RAID 5 – A common configuration that offers a decent compromise between reliability and performance. It provides a gain in read speed, but little or no gain in write performance, because every write must also update the parity. RAID 5 introduces ‘parity’, which is distributed across all disks and takes up the space of one disk in total; at least three disks are required. This level can survive one disk failure. In a four-disk RAID 5, for example, a hot spare can be configured as a fifth drive that sits idle in the system with no data saved to it. If one disk fails, its data can be rebuilt onto the hot spare from the data and parity on the remaining drives. Once the rebuild has finished, you can remove the failed drive and replace it with a new one, which becomes the new hot spare.
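The rebuild onto a hot spare can be sketched in Python as a toy model. It assumes that each disk is a list of equally sized blocks, that parity is the XOR of the data blocks in a stripe, and that the parity block moves one disk to the left on every stripe. Real controllers use a variety of layouts and operate on raw devices; the function names here are hypothetical.

```python
def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    result = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            result[i] ^= byte
    return bytes(result)


def build_raid5(data_blocks: list[bytes], num_disks: int) -> list[list[bytes]]:
    """Lay out data with one parity block per stripe, rotating across the disks.

    Returns one list of blocks per disk (index = stripe number).
    """
    if num_disks < 3:
        raise ValueError("RAID 5 needs at least three disks")
    per_stripe = num_disks - 1  # data blocks per stripe; the remaining slot holds parity
    if len(data_blocks) % per_stripe:
        raise ValueError("pad the data to a whole number of stripes")
    disks: list[list[bytes]] = [[] for _ in range(num_disks)]
    for s in range(len(data_blocks) // per_stripe):
        stripe_data = data_blocks[s * per_stripe:(s + 1) * per_stripe]
        parity_disk = (num_disks - 1 - s) % num_disks  # parity shifts one disk per stripe
        remaining = iter(stripe_data)
        for d in range(num_disks):
            disks[d].append(xor_blocks(stripe_data) if d == parity_disk else next(remaining))
    return disks


def rebuild_to_spare(disks: list[list[bytes]], failed: int) -> list[bytes]:
    """Recreate every block of the failed disk from the surviving disks.

    Within a stripe, all blocks XOR to zero, so the missing block (data or parity)
    equals the XOR of the blocks that survive.
    """
    survivors = [disk for i, disk in enumerate(disks) if i != failed]
    return [xor_blocks([disk[s] for disk in survivors]) for s in range(len(survivors[0]))]


if __name__ == "__main__":
    data = [bytes([i, 2 * i]) for i in range(6)]   # six tiny 2-byte data blocks
    array = build_raid5(data, num_disks=4)         # four disks -> two stripes
    hot_spare = rebuild_to_spare(array, failed=2)  # disk 2 dies, spare is filled
    print(hot_spare == array[2])                   # True
```

The rebuild has to read every block on every surviving disk, so it puts the remaining drives under heavy load for its whole duration.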

RAID 6 – Takes the concept of RAID 5 and adds further redundancy with dual parity, so data can be recreated even if two disks in the array fail. The dual parity is spread across all the disks and takes up the space of two drives.
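The trade-off between usable space and fault tolerance across these four levels can be computed with a few lines of Python. This sketch assumes identical drives (4 TB is an arbitrary example size) and treats RAID 1 as a mirror in which every disk holds a full copy; the function name is hypothetical.

```python
MIN_DISKS = {0: 2, 1: 2, 5: 3, 6: 4}


def raid_summary(level: int, num_disks: int, disk_tb: float) -> tuple[float, int]:
    """Return (usable capacity in TB, number of disk failures survived)."""
    if level not in MIN_DISKS:
        raise ValueError(f"RAID {level} is not covered by this sketch")
    if num_disks < MIN_DISKS[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DISKS[level]} disks")
    if level == 0:
        return num_disks * disk_tb, 0        # striping only: all space, no redundancy
    if level == 1:
        return disk_tb, num_disks - 1        # every disk holds a full copy
    if level == 5:
        return (num_disks - 1) * disk_tb, 1  # one disk's worth of parity
    return (num_disks - 2) * disk_tb, 2      # RAID 6: two disks' worth of parity


if __name__ == "__main__":
    for level, disks in ((0, 4), (1, 2), (5, 4), (6, 4)):
        usable, survives = raid_summary(level, disks, disk_tb=4.0)
        print(f"RAID {level}: {disks} x 4 TB -> {usable:.0f} TB usable, survives {survives} failure(s)")
```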

Over the last 30 years, many more RAID levels have been developed, mainly by RAID system manufacturers. Today there are levels ranging from RAID 0 all the way to RAID 61 and beyond, including nested levels such as RAID 10, and larger companies have created bespoke RAID levels to support different applications and infrastructure requirements.

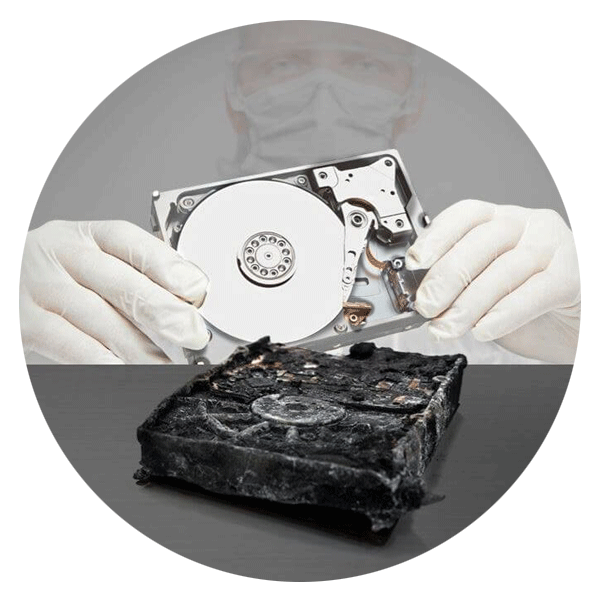

Dangers of RAID systems and dealing with drive failures

If a disk fails in a RAID 1 or RAID 5 array, the user should not replace it with a new one before making sure that all data on the remaining drives has been backed up. If the array was built from disks that came off the production line at the same time, the chance that another drive will also fail soon is considerable. This is just one of the inherent dangers of RAID systems.

Even with all the performance and data security benefits RAID offers, there is one point that many users (especially home consumers) forget and that everybody should keep in mind: having a RAID system is not the same as having a backup.

RAID can be combined with backups to make the whole storage system much more secure, but RAID must never be used instead of a backup. On the contrary, when a RAID system fails (for example because of a malfunctioning hardware RAID controller, or because more drives fail than the chosen RAID level can tolerate), it is much more complicated both to get the RAID running again and to recover any lost data.

NAS systems have become more and more affordable for home users, who use the built-in RAID levels in combination with other advanced storage technologies such as deduplication to get as much space as possible out of their systems. This comes at a price: in many cases these systems are set up incorrectly, and when a failure arises, the whole system breaks down. In these cases, data recovery experts like Ontrack have to reconstruct the several data layers created by the many technologies the user has implemented before the original data can be recovered.

Before setting up a RAID array, all users, whether consumers or enterprise IT administrators, should carefully consider whether RAID is the right approach at all and which RAID level best suits their needs. Remember: negligence at the beginning can lead to serious problems, high costs and possible data loss later!

With this advice in mind, there is a good chance that RAID has many more years of life left in it. Even with many other, newer methods of storing data coming to market, it will most likely take a long time for RAID systems to vanish from the modern world of IT.

Do you use RAID systems at home or in your organisation? What type of system do you have and why? Let us know by tweeting @OntrackUKIE

Picture copyright: Paul-Georg Meister / pixelio.de