What does the future hold for hard disk drives?

Even though sales of SSD-based media are on the rise, traditional magnetic hard disk drives (HDDs) remain popular with both private users and businesses. SSD prices have fallen over the last few years, but HDDs are still the cheaper option as far as storage space is concerned.

The producers of traditional HDDs know that they will soon have to deliver technological advances to keep SSDs from making HDDs obsolete. SSD and HDD technologies differ fundamentally (for one thing, the life expectancy of an SSD decreases every time data is written to its chips), but the main reasons for choosing an HDD are:

- price per terabyte and

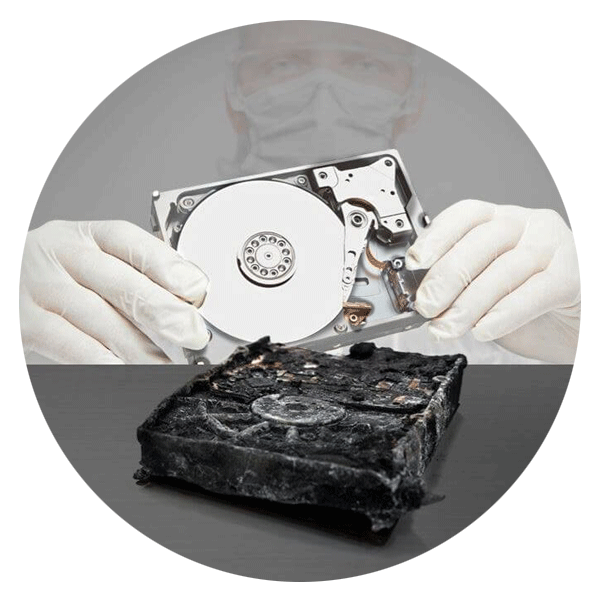

- easier data recovery after a data loss

But these advantages over SSD technology could vanish in time, so producers are eager to develop new hard disk technologies to maintain or widen the gap.

The technological evolution of HDDs

Since IBM introduced the first hard disk drive in 1956, the industry has increased storage capacity exponentially to meet an ever-growing need. With the arrival of consumer electronic devices that record and play video in 4K and higher resolutions, and with the processing of Big Data in enterprises, the demand for storage is growing even further.

For decades, HDD manufacturers focused on a method called Longitudinal Recording Technology (LRT) to record data on drives. In LRT, the magnetisation of each data bit (i.e. the binary digit 0 or 1) is aligned horizontally, parallel to the surface of the disk (or disks) that spin inside the hard drive.

The problem with this method is that we are rapidly approaching the point where the microscopic magnetic grains on the disk become so tiny that thermal energy and interference from neighbouring grains can flip them, so they lose their ability to hold their magnetic orientation. The resulting data corruption could render a hard drive unreliable and thus unusable. This phenomenon is known as the superparamagnetic effect (SPE). Higher coercivity, i.e. a stronger resistance of each bit to unintended changes in its magnetisation, is needed to overcome SPE.

That’s why Perpendicular Magnetic Recording (PMR) was developed. It was first introduced into the market in August 2005 by Toshiba; only a couple of months later, Western Digital and Seagate followed suit with their own PMR HDD products. In PMR, the magnetic dipole moments, each of which represents a logical bit (in combination with a common read-channel method such as PRML), are not parallel to the surface of the disk (longitudinal) but perpendicular to it. The data is thus recorded, to a certain extent, depth-wise. This allows a potentially much higher data density (about three times higher) than with its precursor LRT: the same surface can accommodate much more data.

But there is also a drawback to this technology: the smaller Weiss domains (the magnetised regions in the crystals of a ferromagnetic material) require a shorter distance between the read/write head and the magnetic surface for data to be written or read reliably. This makes the technique difficult to implement, and it will soon reach its natural limit, since the head cannot be made arbitrarily small.

Despite this problem, PMR is, to date, still the standard for recording data on hard disk drives. The move to PMR has increased the maximum platter density by an order of magnitude, from about 100 Gb per square inch to about 1000 Gb per square inch. Now, however, we are beginning to hit the limits of PMR. 8 TB hard disk drives with six(!) platters inside are now hitting the market. Experts expect 10 TB HDDs based on PMR technology to follow, but whether producers can shrink the heads even further beyond that point and fit more platters into an HDD remains to be seen.
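The platter figures above invite a quick back-of-the-envelope check. The following Python sketch uses only the numbers quoted in this article (8 TB on six platters, a 10 TB target). The function names are made up for illustration, and the calculation assumes that capacity is spread evenly across all platters.

```python
import math


def capacity_per_platter_tb(drive_tb: float, platters: int) -> float:
    """Average capacity per platter, assuming an even split (an assumption)."""
    return drive_tb / platters


def platters_needed(target_tb: float, per_platter_tb: float) -> int:
    """Whole platters required to reach target_tb at a fixed per-platter capacity."""
    return math.ceil(target_tb / per_platter_tb)


def density_increase_needed(target_tb: float, current_tb: float) -> float:
    """Fractional density gain needed to reach target_tb with the same platter count."""
    return target_tb / current_tb - 1.0


per_platter = capacity_per_platter_tb(8, 6)
print(f"per platter today: {per_platter:.2f} TB")
print(f"platters for 10 TB at today's density: {platters_needed(10, per_platter)}")
print(f"density gain for 10 TB on six platters: {density_increase_needed(10, 8):.0%}")
```

Under these assumptions, a 10 TB drive needs either more platters or roughly a quarter more data per platter, which is why head size and platter count are the two levers mentioned above.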

To solve this problem, a new technology that still builds on the original PMR method (the so-called PMR+) was introduced in 2013: Shingled Magnetic Recording, or SMR. This technology raises the track density on the disk to gain a jump of about 25% in capacity. Simply put, it increases the number of data tracks per inch by squeezing them together so that they overlap slightly, like shingles on the roof of a house.
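To get a feel for what a 25% capacity gain means geometrically, here is a rough Python sketch. It rests on simplifying assumptions that are not from the article: the amount of data per track stays the same, capacity scales linearly with tracks per inch, and conventional tracks sit exactly one track width apart. The function name is hypothetical.

```python
def track_pitch_factor(capacity_gain: float) -> float:
    """Relative track spacing needed for a given fractional capacity gain.

    Assumes capacity is proportional to tracks per inch (a simplification).
    """
    return 1.0 / (1.0 + capacity_gain)


pitch = track_pitch_factor(0.25)  # 25% gain, the figure quoted in the text
overlap = 1.0 - pitch             # share of each track covered by its neighbour
print(f"track spacing shrinks to {pitch:.0%}, i.e. about {overlap:.0%} overlap")
```

In this simplified picture, fitting 25% more tracks into the same space means each track is spaced at about four fifths of its written width, so roughly a fifth of every track lies under its neighbour.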

This new method also presents a problem: because the tracks overlap, writing a new track can affect the adjacent track so severely that it has to be rewritten as well. That rewrite in turn affects the next track, so the whole shingled area has to be rewritten from the point of change onwards. To keep this chain of rewrites from continuing across the entire disk, there is usually a gap between groups of tracks. The necessary refresh of the neighbouring tracks reduces the writing speed in general, so SMR offers more space but less speed.
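The rewrite behaviour can be illustrated with a toy model in Python. This is a minimal sketch, not how any real drive firmware works: it assumes tracks are grouped into fixed-size bands separated by gaps, and that changing one track forces a rewrite of every later track in the same band. The function name and the band size of four are made up for illustration.

```python
def tracks_to_rewrite(track: int, band_size: int) -> list[int]:
    """Tracks that must be physically written when `track` changes.

    Toy model: tracks are grouped into bands of `band_size` tracks, each band
    followed by a gap. Every track after the changed one, up to the end of
    its band, is overlapped and must be rewritten too.
    """
    band_start = (track // band_size) * band_size
    band_end = band_start + band_size  # exclusive; the gap follows here
    return list(range(track, band_end))


BAND_SIZE = 4  # hypothetical value, chosen only to keep the example small

for changed in (0, 5, 7):
    rewritten = tracks_to_rewrite(changed, BAND_SIZE)
    print(f"change track {changed}: write tracks {rewritten} "
          f"({len(rewritten)}x the work of a non-shingled drive)")
```

In this model, changing the last track of a band costs a single write, while changing the first track costs a full band rewrite. That spread is the trade-off between space and speed described above.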

Image source: Tim Reckmann / pixelio.de